What Is Vanity Fair?

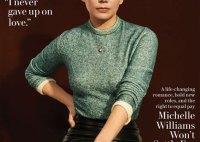

Vanity Fair is a magazine published by Condé Nast, a media company based in New York City. The magazine was launched in 1913 by Condé Montrose Nast, and Frank Crowninshield became its editor shortly afterward. Publication was suspended in 1936, when the title was folded into Vogue, and Condé Nast revived it in 1983. Vanity Fair is one of the most widely read magazines in the world, with a circulation of…